2) Installing venv through apt and apt-get

On Debian and Ubuntu, the venv module is packaged separately and can be installed with `sudo apt install python3-venv` (or `sudo apt-get install python3-venv`). In some cases the installation seems to complete, but creating a virtual environment with `python3 -m venv` still fails.

Once venv is available, all we need to do is execute the venv module, which is part of the Python standard library. Run `cd test-project/` and then `python3 -m venv venv/`, which creates an environment called `venv/`.

⚠️ Note: You can replace `venv/` with a different name for your environment.

Voilà! A virtual environment has been born.

venv-pack supports virtual environments created using venv (part of the standard library, the preferred method) and virtualenv (an older tool that is Python 2 compatible). See conda-pack for a similar tool made for conda environments.

Airflow has an operator, included in Amazon MWAA (Managed Workflows for Apache Airflow), that is used to create the EMR cluster: `EmrCreateJobFlowOperator`. The operator takes a config structure passed to the parameter `job_flow_overrides`.

Step 4: Start and Run the Spark and Airflow Application

With all our setup done, we are good to go: we can now start the Docker containers and open the Spark and Airflow UIs to confirm that everything is running. Now our project looks like this:
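Because `python3 -m venv` is a thin command-line wrapper around the standard-library `venv` module, the same environment can also be created from Python. This is a minimal sketch: the directory names mirror the `test-project/venv/` example, and `with_pip=False` is an assumption chosen so creation still succeeds where `ensurepip` is unavailable, which is a common cause of `python3 -m venv` failures on Debian and Ubuntu.

```python
import os
import venv
from pathlib import Path


def create_env(project_dir: str, env_name: str = "venv") -> Path:
    """Create <project_dir>/<env_name>, like `cd project_dir && python3 -m venv env_name/`."""
    env_dir = Path(project_dir) / env_name
    builder = venv.EnvBuilder(
        # The venv CLI symlinks the interpreter on POSIX and copies it on Windows.
        symlinks=(os.name != "nt"),
        # Skip ensurepip so creation works even where ensurepip is missing;
        # pip can be installed into the environment afterwards if needed.
        with_pip=False,
    )
    builder.create(env_dir)  # also creates missing parent directories
    return env_dir
```

For example, `create_env("test-project")` produces `test-project/venv`, the same layout the shell commands create.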

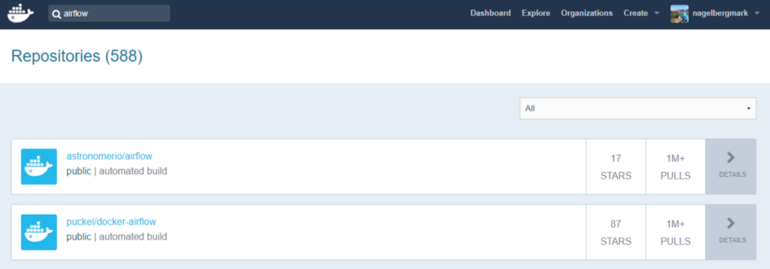

AIRFLOW DOCKER EMR CODE

Install Docker on the instance and set up non-sudo access to it.

venv-pack is a command-line tool for packaging virtual environments for distribution. This is useful for deploying code in a consistent environment. While performing a batch-processing ETL job through S3, Glue, and EMR, we had to use the venv-pack library to package the environments so that they could be shipped to wherever we needed to run them: we created a new environment, installed the dependencies, and then packed the environment.
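The create, install, and pack sequence can be sketched in Python with `subprocess`. This is a sketch under several assumptions, not the exact commands used in the post: a POSIX layout (`<env>/bin/...`), a hypothetical `requirements.txt` listing the job's dependencies, venv-pack installed into the environment itself, and the `--prefix`/`--output` options of the venv-pack CLI (check `venv-pack --help` for your version). The names `pyspark_venv` and `pyspark_venv.tar.gz` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_pack_commands(env_dir: str, requirements: str, output: str) -> List[List[str]]:
    """Return argv lists for: create env -> install deps -> pack env.

    Assumes a POSIX layout (<env>/bin/...) and that venv-pack is installed
    into the environment alongside the job's requirements.
    """
    bin_dir = Path(env_dir) / "bin"
    pip = str(bin_dir / "pip")
    return [
        # 1. Create the environment (same as `python3 -m venv <env_dir>`).
        [sys.executable, "-m", "venv", env_dir],
        # 2. Install the job's dependencies, then venv-pack itself.
        [pip, "install", "-r", requirements],
        [pip, "install", "venv-pack"],
        # 3. Pack the environment into an archive that can be shipped elsewhere.
        [str(bin_dir / "venv-pack"), "--prefix", env_dir, "--output", output],
    ]


def run_all(commands: List[List[str]]) -> None:
    """Execute each command in order, raising on the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

Calling `run_all(build_pack_commands("pyspark_venv", "requirements.txt", "pyspark_venv.tar.gz"))` would leave an archive ready to upload to S3. Keeping command construction separate from execution makes the plan easy to inspect or log before anything runs. venv-pack's documentation notes that the Python interpreter itself is not bundled in the archive, so the machines that unpack it need a compatible Python installation.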

There are two ways to submit a Spark job to EMR.
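One common approach is to attach the Spark job as an EMR step inside the cluster definition that `EmrCreateJobFlowOperator` receives through `job_flow_overrides`; which two ways the post refers to is not stated, so treat this as an illustrative sketch rather than the author's configuration. The dict follows the shape of the EMR `RunJobFlow` request. The bucket, script, and archive paths, the cluster name, the release label, and the instance types are hypothetical placeholders, and the `--archives ...#environment` and `PYSPARK_PYTHON` settings assume the environment was packed with venv-pack and uploaded to S3.

```python
from typing import Any, Dict, List

# Hypothetical S3 locations; replace with your own bucket and paths.
BUCKET = "s3://my-example-bucket"
ENV_ARCHIVE = f"{BUCKET}/envs/pyspark_venv.tar.gz"
JOB_SCRIPT = f"{BUCKET}/jobs/etl_job.py"


def spark_step(name: str, script: str, archive: str) -> Dict[str, Any]:
    """Build one EMR step that runs spark-submit through command-runner.jar."""
    python = "./environment/bin/python"  # interpreter path inside the unpacked archive
    return {
        "Name": name,
        "ActionOnFailure": "TERMINATE_CLUSTER",
        "HadoopJarStep": {
            "Jar": "command-runner.jar",
            "Args": [
                "spark-submit",
                "--deploy-mode", "cluster",
                # Ship the packed venv; YARN unpacks it under the alias ./environment.
                "--archives", f"{archive}#environment",
                "--conf", f"spark.yarn.appMasterEnv.PYSPARK_PYTHON={python}",
                "--conf", f"spark.executorEnv.PYSPARK_PYTHON={python}",
                script,
            ],
        },
    }


def job_flow_overrides(steps: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Cluster definition shaped like an EMR RunJobFlow request (placeholder values)."""
    return {
        "Name": "etl-cluster",  # hypothetical cluster name
        "ReleaseLabel": "emr-6.10.0",  # assumption: choose a release matching your Python
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {"Name": "Primary", "Market": "ON_DEMAND", "InstanceRole": "MASTER",
                 "InstanceType": "m5.xlarge", "InstanceCount": 1},
                {"Name": "Core", "Market": "ON_DEMAND", "InstanceRole": "CORE",
                 "InstanceType": "m5.xlarge", "InstanceCount": 2},
            ],
            # Shut the cluster down once all steps have finished.
            "KeepJobFlowAliveWhenNoSteps": False,
            "TerminationProtected": False,
        },
        "Steps": steps,
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }
```

In a DAG, the result would be passed as `job_flow_overrides=job_flow_overrides([spark_step("etl", JOB_SCRIPT, ENV_ARCHIVE)])` to `EmrCreateJobFlowOperator`, which recent versions of the Amazon provider expose from `airflow.providers.amazon.aws.operators.emr`.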
